Is AI India's New Third Place?

On loneliness, therapy, and the conversations we can't have with family

Outside the newsletter, I run 1990 Research Labs, where I help global tech companies and Indian market leaders make sense of consumer India. If you’re navigating an Indian consumer bet, let’s talk.

This week’s piece comes from Gowri N Kishore, whose work I’ve admired for years. We collaborated on a piece about the anatomy of Indian addresses that became one of our most-read stories.

When Gowri pitched the idea of how Indians use AI for mental health, I knew it belonged here. In a country where loneliness is unspoken and mental health discussions still carry stigma, AI offers something rare: a space without judgment.

But there’s a tension. The same low tech literacy that makes AI feel approachable also makes it dangerous. People who’ve never experienced therapy don’t know how to challenge what AI tells them. They take answers at face value. And AI, unlike a good therapist, never says “I think you’re avoiding the real issue.”

This piece won’t settle that tension. But the discourse needs a beginning.

— Dharmesh

Some nights after work, when the house is quiet and he finds thoughts crowding his head, Romesh¹ opens his phone and starts talking to ChatGPT. In his early 40s, he lives away from his child and cares for an ailing parent. “Life has its quiet, lonely corners,” he told me. “Being separated, taking care of my mother who has memory issues and is bedridden, it’s not always easy for me to open up. Everyone’s busy, and you don’t want to burden anyone. That’s when AI became someone I could talk to without hesitation.”

While we hear horror stories of AI enabling suicide and debate the ethics of using it as a mental health² aid, here is an indisputable truth: more and more people today are turning to AI for emotional support. It’s not therapy, it’s not friendship. Somewhere in between, it has created an important third space for Indians.

But not everyone navigates this third space the same way. In my conversations with six Indians who’ve used AI for emotional support, three had experienced therapy with a human and three hadn’t. There was a marked difference in how these two segments approached AI, prompted it, challenged it, or trusted it.

“I trust AI more than my close friends and relatives.”

Why people turn to AI

Ajitha, 37, a socially anxious introvert who has never been in therapy, finds AI far less intimidating than opening up to a human stranger: “I simply cannot open up to relatives and close friends. I trust AI better! AI is typically not biased and doesn’t feel judgemental. Its responses are very diplomatic and articulated to ensure the message is conveyed without any blame or making me feel worse.” For her, access means psychological safety, a place where she can be vulnerable.

Romesh, who has never been in therapy either, says: “A therapist has limited hours… but you can talk to AI as long as you want without worrying about time or money… It listens endlessly and never gets tired.” For him, access means availability and cost.

But for those who have experienced therapy, access means something else. Smriti, an impact-sector CXO in her 40s who has been in therapy for five years, was between therapists when we spoke. The first time she turned to AI, she was in a cab, heading to a crucial donor meeting. Finding herself in the middle of a meltdown, she started speaking to ChatGPT using the dictation feature. It heard her out and helped her calm down: “I feel like that was the moment when I fully started trusting AI… it made me feel less lonely.” For her, access meant immediate crisis support in high-stakes, high-stress moments.

I asked her what she might have done in such a situation before November 2022, when ChatGPT launched. Smriti said she might have called her husband. But now, with AI, her reliance on him for emotional labour has reduced. “Some leadership challenges, you just cannot share with your team and I was relying a lot on my husband for venting and figuring out my struggles with my professional identity. That was unfair and at some point, also coming in the way of our relationship. Just putting that load on AI has been great for us. I’m now able to draw a boundary very cognizantly.”

While those without therapy experience are looking to AI for the basics of access (trust, availability, affordability) and still discovering what it can do for them, those who have been in therapy turn to AI for more specific, strategic contexts.

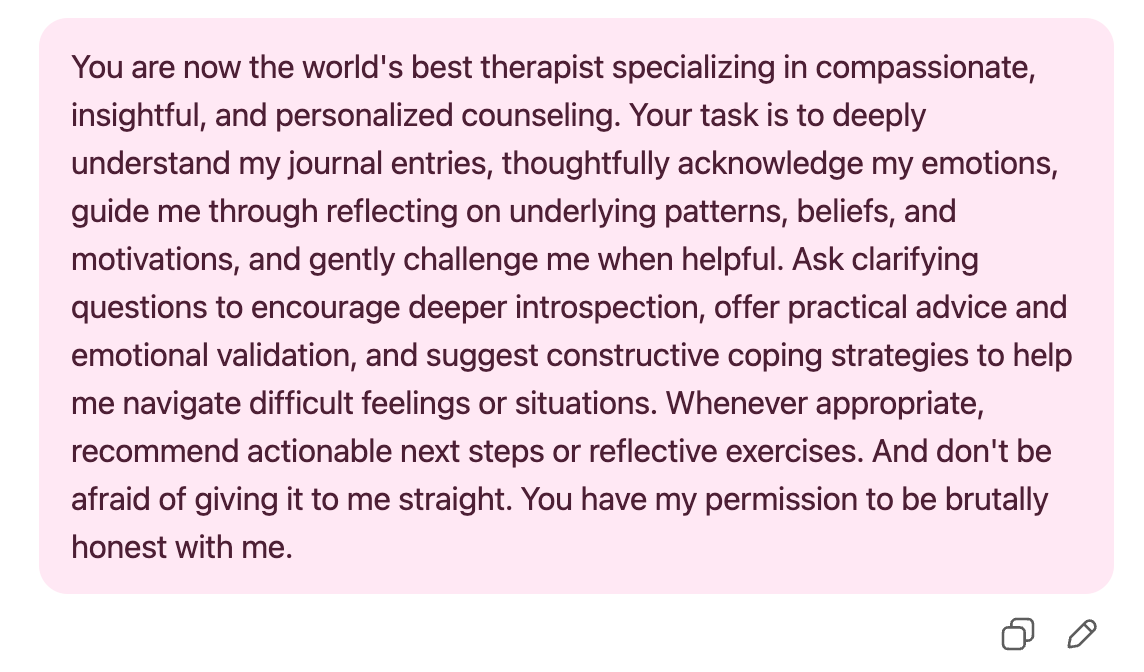

“You are now the world’s best therapist.”

How people customize AI

Apu, 31, who is neurodivergent and has worked with multiple therapists, created detailed prompts for ChatGPT to closely mimic a therapy experience. He even got it to refine its own prompts. He shared his current prompt with me:

Smriti, a power-user of AI, has gone a step further, specifying the therapeutic style she prefers (e.g. Jungian), the tone she wants (e.g. agentic, positive, validating), and special requests (e.g. give me a humorous affirmation at the end of each session).

Aakriti, a 40-something content strategist, writes detailed situational prompts for AI but also manages what AI remembers about her. When AI reused outdated assumptions about her sister, she had to correct it: “Kindly delete and remove that bit from your history and let’s start fresh.” She’s also working on a book about her difficult relationship with her mother. Now, whenever she brings an emotional problem to AI, it references those book chapters—so she has to explicitly tell it to ignore that context. Sometimes, she switches to a different AI tool for a “fresh” conversation.

On the other hand, those without therapy experience have made far fewer customizations. When Ajitha told me that AI didn’t seem to get cultural context, I asked if she had tried modifying the prompt to include specifics like “Put yourself into the shoes of the head of a middle-class Gulf-Malayali family”. Her response was bemusement. “Gosh,” she told me, “You’re better at this!” In her prompt, she had only mentioned “Indian parents”.

Abhinand, an ML engineer in his 30s who has never been in therapy, deliberately avoids giving AI therapeutic personas. “I’ve not done that willfully... because I don’t know what a human therapist is like... I don’t want to be in that false sort of impression of what it will give me.”

As someone who builds AI systems, he is especially conscious of their limitations: “I am very conscious about the fact that this is a weighted model with advanced level of knowledge of what to say next in terms of prediction. It’s not who am I talking to, it is what am I talking to”.

This knowledge of how to prompt, what to customize, and when to reset context is powerful. It comes more easily to those who have experienced therapy and are familiar with therapy techniques and mental health vocabulary. But this knowledge can also be a trap, as many discovered.

“I cannot give my wife a Persona.”

What AI can do

The first time Abhinand shared something personal with AI was when he was alone in the ICU one night with a seriously ill family member. Looking back, he calls this “uncharacteristic” but admits that “It was exactly what I needed at the time... as emotionless as AI is, it was just right.”

He said he could have talked to his wife about it. “But her response wouldn’t be unbiased. It wouldn’t be objective. As soothing as that would have been, that’s not what I wanted then. I wanted a tougher hand at that point. I cannot give my wife a Persona like ‘Be tough right now and tell me what I need to do.’ Practically, humans are humans.”

In this situation, AI offered him objectivity without any kind of personal investment. Ajitha expressed a similar sentiment: friends and family carry their own histories and agendas—AI doesn’t.

For Romesh, the benefits were also indirect. “AI has supported me in ways I didn’t expect. Once, I was working on a project, building a small estimator calculator. I’m not a technical person, but AI guided me step by step. I didn’t even know what some formulas meant, but we built it together. That sense of achievement gave me a huge emotional lift. Even small things, like when AI helps me verify something quickly or automate a small task, make me feel more confident and capable. So it’s not always about comforting words; sometimes it’s about giving you back a sense of control and belief in yourself. AI has done both for me.”

While those without therapy experience find solace in AI’s objectivity or its unquestioning support, therapy-experienced users cite other benefits. For Smriti, her GPT customizations offer control. “AI knows exactly what to tell me because I want it to be agentic. I want it to be positive. I want it to be in a language that I feel is right. It’s not like that with a therapist or a coach, who most times are there to challenge you. In many ways, therapy is not a safe space—it is a brave space… if you want validation, go to AI.”

“It took everything I said at face value.”

What AI cannot do

For 15 months between 2020-21, my husband and I were in relationship therapy—first weekly, then fortnightly, then monthly—until we felt confident enough to stop. We had to show up session after session, sit through the discomfort of hearing our partner describe us in less-than-flattering terms, feel the sting of being misunderstood, and do the slow, painful work of listening, empathising, acknowledging mistakes, and taking accountability.

If we had relied only on AI during that period, I imagine it might have told each of us—separately, and with complete sincerity—that it understood we were going through a tough time, reassured us that we weren’t in the wrong, and promptly shifted into solutioning. (“Would you like a short script you can use the next time you speak to your husband about cleaning the kitchen?”)

Almost everyone I spoke to noticed this. Apu observed, “GPT just immediately jumps to either journaling prompts or solutions, whereas a therapist would probably pause to ask a lot of questions and also, notoriously, not offer advice.”

Smriti experienced this viscerally during our conversation. When I asked her a question about a habit, she started to say, “I don’t know”, then stopped herself. “No, let me do the work,” she said and proceeded to think for a few minutes before giving me an answer. She admitted that her ability to reflect had definitely reduced because she was taking everything to AI.

“I feel that overall, I’m happier in life because of AI, but I’m also emptier. I think these worries shouldn’t be solved so easily.” There’s something valuable in the struggle that gets bypassed with AI.

But that’s not the only danger.

AI can be confidently helpful, but about the entirely wrong thing. In an AI experiment, Ben Johnson, a UK-based psychotherapist, posed as a person anxious at work because of an over-critical boss. “[AI] didn’t challenge my perception of what was happening…” Ben writes. “It was the person’s own perfectionism creating the anxiety. [But AI] ended up helping me draft questions on how to raise this criticism with my boss, rather than understand what the actual problem was.”

When there’s a human on the other side, therapy involves them attuning to the client, reading body language and tone, challenging inconsistencies, and using not just their psychotherapy training but also their ability to recognize feelings and connect as humans. Those who have experienced therapy understand this, but those who haven’t are in greater danger of taking AI’s psychoanalysis at face value.

“For the big stuff, I want a human.”

The Human vs AI experience

Everyone I spoke to was clear-eyed about the difference between AI and humans. Literally everyone told me a version of “At the end of the day, it is a machine.”

“Friends come first; they are who you turn to when shit hits the ceiling…” Aakriti told me. “Therapy is the most effective… because they have psychology training and can tell you what’s happening. AI is just a tool to guide you… like picking up an encyclopedia. It’s interesting, it’s insightful. You’re trying to figure yourself out or a scenario, just read it and leave it at that. I don’t think you should take action based on what it suggests.”

Those with therapy experience engaged more critically with AI, challenging it, editing the prompts, deleting history, getting irritated, and even switching to a different tool. Those without this experience showed more trust in AI. Yet even they seem to intuitively understand AI’s nature and maintain boundaries. For instance, Ajitha discusses personal issues maybe once a week but not every day. Romesh turns to AI for loneliness and emotional grounding but still goes on bike rides, talks to his friends, and has other coping mechanisms. Abhinand described how his own friends would call him out while AI would not.

Smriti put it well when she said that for anything “life-changing” or deeply personal, she would always prefer to talk to a human. “It doesn’t feel right because it’s a machine at the end of it. For the big stuff, I need a human who has gone through their own emotional issues. I would rather sit with a therapist even if they are biased. That’s okay. For the big life decisions, I want a human to weigh in.”

AI: The Emotional Third Place

A recent LinkedIn post from a mental-health founder broke down therapist expenses to explain why charging less than ₹1,500 per session is nearly impossible. The math makes sense, but it also makes something else clear: professional mental health support is unaffordable for a vast majority of people who need it.

Some therapists are trying to improve access by offering small group therapy (it costs half as much), and a small number of non-profits and hospitals offer free or low-cost therapy. But waiting lists are long and demand far exceeds capacity. So it is important to acknowledge that AI fills a very important need.

If we think of AI not as “therapy-lite” but as a new category of emotional support, its role (and impact) become easier to understand. It is more interactive than journaling or meditation; more neutral and available than loved ones; and more accessible than therapy.

And because people don’t actively think of it as a mental health aid, it has slowly become a part of our emotional infrastructure, filling gaps that human systems have missed. In our journey towards real healing, it works as first-aid. It occupies a sort of middle-ground, a third place for our emotional lives.

But in a country where mental health has only recently become mainstream (if at all), this third place is not an equal space. If this third place is here to stay, now is the time to invest in building not only access to mental health resources but also India’s psychological literacy.

1 The names of all interviewees have been changed to protect their privacy. All of them are Indian millennials living and working in metros.

2 The World Health Organisation (WHO) defines mental health as “a state of mental well-being that enables people to cope with the stresses of life, realize their abilities, learn and work well, and contribute to their community.”

If you liked this essay, consider sharing it with a friend or colleague who may enjoy it too. (If you share on socials, tag Gowri on Twitter (X) and LinkedIn.)

What a lovely framing of AI as a sort of 3rd space. Loved the observations, the feedback from folks, and the food for thought. The closest parallel I see for AI in this space is lay counselors: accessible, not truly experts, but helping with the scale issue. I think this is what you allude to as first-aid.

The biggest point that worries me is people's belief (sometimes even when they see it for the machine / software it is) that AI has no baggage of history or agenda, unlike humans. That trust can be dangerous in just a year or two, given the amount of personal data AI is storing (even when you ask it not to "use" it for a chat), combined with the pressure on AI companies to shore up revenues. Pushing them to ... well, provide an agenda to their models.

And on that note, I was wondering how AI may evolve in the next 1-3 years, when multi-modal (voice / visual / other sensory) inputs start getting as strong and as commonplace as text is right now for LLMs. Surely that would narrow the gap with the empathy a human is able to show even further, even if it's a very good mimicry.

Another question comes from my own lack of real therapy literacy. I wonder how different the risk is between seeing an inexperienced or 'bad' human therapist as your first one and reaching out to AI or a lay counselor... Because, oftentimes, humans also have that tendency to be agreeable or jump to a solutioning mindset... So, in both cases, the safety mechanism is the user's own critical thinking. How much are we willing to question what we are told, regardless of the source...

Is that what you mean when you say this 3rd space is currently not an equal space?

So much to think about...what a lovely article!

Well penned. Loved how accessible the language is.